I had the pleasure of attending a lecture by Alan November (who also blogs here) today as a pre-session of the CASE conference. I attended with a number of colleagues (the Superintendent, COO, Chief of Ed Programs, CAO, Director of ELA, and Director of Technical Ed). Alan November has long been considered a guru of educational technology and technology integration. He has been a beacon for many school districts and has held the attention of leaders in and out of technology in a very positive way.

That said, the presentation I attended today was uninspired and lacked a discernible focus. It started with the typical Friedman approach of pointing out that the world is shrinking and there are more gifted students in China than there are children in America. This is a tried-and-true mechanism for appealing to our isolationist/nationalist instincts and making us pay attention. He dropped one other shocker on us: the electronic whiteboards that we all love have been proven to have a negative effect on education by dumbing down the curriculum. I know that got the attention of the attendees because I later heard our COO relaying that fact to some community colleagues who are leading the design of our new high school/community college campus. Alan November wanted to get our attention with the whiteboard research and simultaneously point out that teaching with technology is not about technology; it is about good teaching. That makes great sense, right?

Here's the rub: (1) the attention getter wasn't exactly what it appeared to be, and (2) after arguing that teaching is the key, he went on to demonstrate his superior web searching ability.

First, the BBC news report on the whiteboard evaluation was vague. It did not provide a link to the actual study, did not reveal what metrics were used, and ignored the relationship between professional development and classroom use of this (powerful) tool. What is really compelling is that the BBC site where this article was published has a "comment" component. The comments are thorough and point out the flaws of the study. If Alan November had been the least bit thoughtful, he would not have used this study as an attention grabber, but would have pointed out that the read/write web is amazing at giving both sides of a biased story. When readers comment, they point out the shortcomings of the research or journalism (or both). Alan November sadly missed an opportunity to make a point and instead went for the cheap crowd pleaser. Now people all over Colorado will be saying, "whiteboards have a negative effect on education, they dumb down the curriculum, Alan November says so." This is an example of Web 1.0, where information flows in one direction and the consumer accepts it.

Second, Alan November spent the three and a half hours I attended his session showing us what a great internet searcher he is. Did you know that if you use "host: uk" you get only sites from the United Kingdom? Okay, we get it, now move on. Nope. "host: tr", "host: za", "host: ma" and on and on. We did a Skype call to New Orleans to an employee of November Learning...hey, that was cool in 2005. Wikipedia...neat. He even cited research, which he attributed to the NY Times, showing that Wikipedia has an average of 4 errors per article while Britannica has 3. No real difference, right? However, I think the research was done by Nature, and Britannica disputes the results.

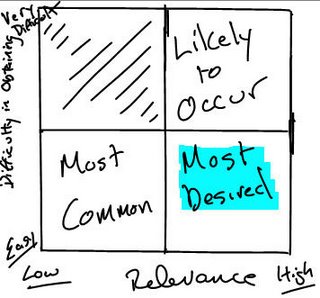

The world is different. Neat. Unfortunately, the technologies he focused on (Wikipedia, searching, podcasting, Skype, wikis, blogs, RSS) are all pretty old at this point. Frankly, I expect an impassioned presentation on the vision for a new world, with real practical strategies for us to achieve this vision.
